I was asked to lead a discussion on the current status of 3D printing in the humanities, particularly the field of history with a great group of fellow PhD students here at GMU during one of their classes.

Here is what we came up with.

First of all, 3D printing (in general, but specifically for history) can be summarized by the following formula.

3D printing for history now = HTML for history in the early 1990's

There is much that can be done, but using a 3D printer for historical research, study, learning, etc, is still very much in a nascent stage. So the question are, what can be done with 3D printing and how does it help us learn about history? We came up with a few ideas.

First, what can we print with a 3D printer? The limits are just about endless, as long as then are condensed to a 5-inch x 11-inch x 12-inch box.

The bigger question is what do 3D printed objects help us learn about history? Here we had some good ideas. Printing buildings to scale, along with figurines, can help us determine the scale of real life objects. Determining scale can help us analyze why some things are larger than others, for instance monuments. Why would the Lincoln monument be larger than the Jefferson, and what does that say about our views (or the creators views) about the subject? Life size objects can show true size that are often distorted or masked when never seen in person, like the Mona Lisa, for example, which is remarkably small.

Preserving rare, fragile, or expensive artifacts has obvious benefits in that it keeps things from getting lost, broken or stolen. 3D historical prints also put physical objects in the hands of students, especially those who might never have the opportunity to handle a real civil war cannonball, a Roman sword, a model of the Antikythera Mechanism, or a scale model of the Titanic. A physical object also offers the additional learning opportunity of tactile feedback over images in a book or on screen.

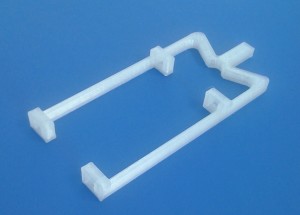

3D printing also offers the opportunity to create inventions that may never have made it into production, such as those from old patents. We even got to look at one, a chop-stick holder from 1967.

Using a 3D printer and associated hardware and software in a history classroom provides yet another opportunity to combine multiple disciplines in an educational atmosphere. Everybody benefits when science, engineering, math, technology and the humanities combine (as was noted about a high school class that built a trebuchet).

We also talked about the ramifications of 3D printing on the future. Interestingly, similar issues that have voiced throughout history to the introduction of new technology, were also raised during the discussion. What happens when we move production of items back to the individual and away from factories? How do we cope with the replacement of humans by technology?

At present, the cost to own a printer in your home is still a bit much, but definitely within reach. Three different printers range from $800 (the do-it-yourself RepRap) to $2500 (Makerbot Replicator 2), with a middle priced option by Cubify at $1500. Filament, the plastic line used to create objects, costs around $50 a spool.

Items printed:

http://www.thingiverse.com/thing:22849 – chopstick holder

http://www.thingiverse.com/thing:32413 – Antikythera machine

Have an idea how 3D printers can be used in education? Add a comment below.