Doh!

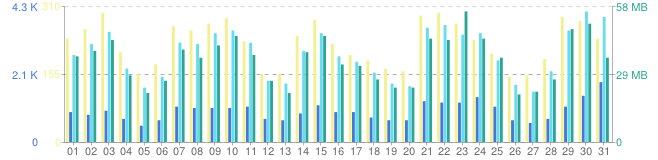

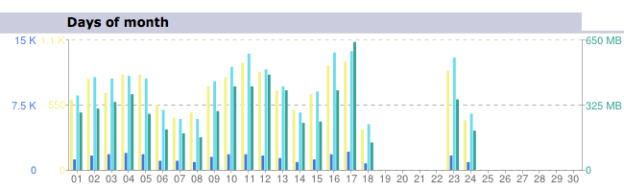

Sometimes AWStats misses a few days when calculating the stats for your site, and that leaves a big hole in your records. Usually, as in my case, it’s because I messed up. I reinstalled some software on our AWStats machine and forgot to reinstall cron, the tool that lets the server run jobs on a timed schedule (including the regular AWStats updates). I didn’t notice until several days later, which left a large gap in the stats for April.

What to do?

Fortunately, there is a fix. Unfortunately, it’s a bit labor intensive, and how much work it takes depends on how you rotate your Apache logs (if at all, which you should). The AWStats documentation (see FAQ-COM350 and FAQ-COM360) gives the basic steps, outlined below:

- Move the AWStats data files for the month with the missing days, and for any newer months, to a temporary directory.

- Copy the Apache logs covering the whole month with the missing days to a temporary directory.

- Merge those logs with AWStats’ logresolvemerge tool, then run the AWStats update tool with a few changed parameters to re-create the AWStats data file for that month.

- Move the AWStats data files for the following months back into place (undoing step 1).

The Devil’s in the Details

Again, depending on how your Apache logs are set up, this can be an intensive process. Here’s how ours are set up, and the process I went through to get the missing days back into AWStats.

Our Apache logs rotate daily, with a separate log for each domain on the server (or for each subdirectory whose stats are calculated separately). That means I’ll have to repeat this process about 140 times. Looks like I need to write a script…
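Here is a minimal bash sketch of the scaffolding such a script might start from. It assumes each domain has its own AWStats config file named awstats.<domain>.conf in a single config directory (the usual AWStats naming convention); `domains_from_configs` and `for_each_domain` are hypothetical helper names, and the actual per-domain work is left to a function you supply.

```shell
#!/usr/bin/env bash
# Sketch only: assumes one AWStats config file per domain, named
# awstats.<domain>.conf, all kept in one config directory.

# Print the <domain> part of every awstats.<domain>.conf in directory $1.
domains_from_configs() {
  local conf name
  for conf in "$1"/awstats.*.conf; do
    [ -e "$conf" ] || continue     # the glob matched nothing
    name=${conf##*/}               # drop the directory part
    name=${name#awstats.}          # drop the leading "awstats."
    printf '%s\n' "${name%.conf}"  # drop the trailing ".conf"
  done
}

# Call a per-domain function (one you write yourself, named in $2)
# once for every domain found in config directory $1.
for_each_domain() {
  local -a domains
  local domain
  # Read the whole list first so the callback can't consume it from stdin.
  mapfile -t domains < <(domains_from_configs "$1")
  for domain in "${domains[@]}"; do
    "$2" "$domain" || echo "failed: $domain" >&2
  done
}
```

For example, `for_each_domain /path/to/awstats/configs echo` just prints each domain, which is a cheap way to check the list before running anything destructive. If your install keeps the awstats.model.conf template in the same directory, filter it out.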

Step 1. Move the data files of newer months

AWStats can’t run the update on an older month if data files for more recent months are in its data directory, so we need to move the data file for the affected month, and the files for every month after it, out of the way. For example, if the missing dates are in June and it is currently August, move the data files for June, July, and August to a temporary directory. The files look like awstatsMMYYYY.domain-name.com.txt, where MM is the two-digit month and YYYY is the four-digit year.
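A bash sketch of this step, assuming the data directory is whatever `DirData` points to in your AWStats config; `stash_data_files` is a hypothetical helper name. It moves the affected month’s file and every later one for a single domain (including months in later years) and leaves older months alone.

```shell
# Move awstatsMMYYYY.<domain>.txt files for month YYYY-MM and every later
# month out of the AWStats data directory into a holding directory.
# Usage: stash_data_files DATA_DIR DOMAIN YYYY-MM HOLD_DIR
stash_data_files() {
  local data_dir=$1 domain=$2 target=${3/-/} hold=$4 f base mm yyyy
  mkdir -p "$hold"
  for f in "$data_dir"/awstats[0-9][0-9][0-9][0-9][0-9][0-9]."$domain".txt; do
    [ -e "$f" ] || continue        # no matching data files
    base=${f##*/}                  # e.g. awstats062012.domain-name.com.txt
    mm=${base:7:2}                 # characters after "awstats": MM ...
    yyyy=${base:9:4}               # ... then YYYY
    # Compare as YYYYMM so months in later years sort correctly.
    if [ "$yyyy$mm" -ge "$target" ]; then
      mv -- "$f" "$hold"/
    fi
  done
}
```

For the June example that would be `stash_data_files /path/to/awstats/data domain-name.com YYYY-06 /tmp/awstats-hold`, with the real year in place of YYYY.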

Step 2. Get the Apache logs for the month

The first thing to do is gather all of the logs for each domain for the month. That works out to one archive per day: 30 or 31 if the month is already over, or however many days have passed so far in the current month. Each of our domains archives its daily logs as domain-name.com-access_log-X.gz and domain-name.com-error_log-X.gz, where X is a sequential number; AWStats only needs the access logs. So the first problem is finding the right files without opening each one to see which day it holds. Fortunately for me, nothing touches these files after they are created, so their mtime (the timestamp of when they were last modified) is intact and usable. That makes it a quick one-liner to grab every file within a given date range and copy it into a working directory.
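To see which archive holds which day before copying anything, a listing like the one below can help. It is a sketch that relies on GNU find’s `-printf` and assumes the domain-name.com-access_log-X.gz naming described above; `list_archive_dates` is a hypothetical name.

```shell
# Print "YYYY-MM-DD filename" (date taken from the file's mtime) for every
# archived access log of domain $2 under directory $1, oldest first.
# Requires GNU find for -printf.
list_archive_dates() {
  find "$1" -type f -name "$2-access_log-*" -printf '%TY-%Tm-%Td %f\n' | sort
}
```

For example, `list_archive_dates /path/to/apache/logs/archive/ domain-name.com` shows at a glance whether the dates line up with the sequence numbers.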

We’ll use the find command to locate the right files. Before building that command, create two marker files whose timestamps set the start and end of the date range:

touch --date YYYY-MM-DD /tmp/start

touch --date YYYY-MM-DD /tmp/end
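If you’d rather not work out the dates by hand for each run, a small bash function can create both markers from a single YYYY-MM value. This is a sketch that assumes GNU date and touch (the `--date` option above is already GNU-specific), and `make_month_markers` is a hypothetical name. Depending on when your rotation runs, the archives at either edge of the window may contain lines from the neighboring month, which is one reason to check the boundaries as noted at the end of this step.

```shell
# Create "start" and "end" marker files in directory $2 for month $1 (YYYY-MM):
# start = midnight on the 1st, end = midnight on the 1st of the next month.
# Requires GNU date for the relative "+1 month" arithmetic.
make_month_markers() {
  local first next
  first="$1-01"
  next=$(date -d "$first +1 month" +%F) || return 1
  mkdir -p "$2"
  touch --date "$first" "$2/start"
  touch --date "$next" "$2/end"
}
```

Running `make_month_markers YYYY-06 /tmp` (with the real year) produces the same /tmp/start and /tmp/end files as the two touch commands.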

Now we can use those files in the actual find command. You may need to create the /tmp/apachelogs/ directory first.

find /path/to/apache/logs/archive/ -name "domain-name.com-access_log-*" -newer /tmp/start -not -newer /tmp/end -exec cp '{}' /tmp/apachelogs/ \;
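GNU find can also compare against a date directly with `-newermt`, which avoids the marker files. Here is a sketch with `collect_logs` as a hypothetical name. It keeps the same window as the find command (newer than the start date, not newer than the end date) and copies only the access logs.

```shell
# Copy the archived access logs of domain $2 under $1 whose mtime is after
# $3 and not after $4 (dates like YYYY-MM-DD) into directory $5.
# Requires GNU find for -newermt.
collect_logs() {
  local archive=$1 domain=$2 start=$3 end=$4 dest=$5
  mkdir -p "$dest"
  find "$archive" -type f -name "$domain-access_log-*" \
    -newermt "$start" ! -newermt "$end" \
    -exec cp -p {} "$dest"/ \;   # -p keeps the original timestamps
}
```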

Now decompress those files so they are usable: change into the /tmp/apachelogs/ directory and run gunzip.

gunzip *log*

If you are rebuilding the current month, also copy in that domain’s current (not yet rotated) Apache log.

cp /path/to/apache/logs/current/domain-name.com* /tmp/apachelogs/

This puts all of the domain’s log files for the month into one directory that we can feed to the AWStats update command.

Things to note: make sure all of the log files you just copied use the same format, and that they contain data for only one month. You can trim any extraneous data by hand or throw some fancy sed commands at the files.
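As one way to do the trimming, here is a bash sketch that keeps only the lines stamped with a given month. It uses awk rather than sed, assumes Apache’s common or combined log format (where the fourth whitespace-separated field looks like `[10/Jun/2012:13:55:36`), and `keep_month` is a hypothetical name.

```shell
# Keep only the lines of FILE whose timestamp falls in month Mon of year YYYY.
# Assumes Apache common/combined log format (timestamp in the 4th field).
# Usage: keep_month FILE Mon YYYY
keep_month() {
  local file=$1 tmp
  tmp=$(mktemp) || return 1
  # Keep a line only if its timestamp field contains "/Mon/YYYY:".
  if awk -v tag="/$2/$3:" 'index($4, tag) > 0' "$file" > "$tmp"; then
    cat -- "$tmp" > "$file"   # overwrite in place, keeping the file's permissions
  fi
  rm -f -- "$tmp"
}
```

For June that could be `for f in /tmp/apachelogs/*access_log*; do keep_month "$f" Jun YYYY; done`, with the real year in place of YYYY.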

Step 3. Run the AWStats logresolvemerge and update tool

Now comes the fun part. First, run the logresolvemerge tool on the log files from the previous step to combine them into a single log file for the whole month. From within the /tmp/apachelogs/ directory, run:

perl /path/to/logresolvemerge.pl *log* > domain-name.com-YYYY-MM-log
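Because logresolvemerge.pl writes the merged lines in date order, looking at the first and last lines is a cheap way to confirm the merged file covers the month you expect. A sketch, again assuming common/combined log format; `log_span` is a hypothetical name.

```shell
# Print the first and last timestamps of a date-sorted log file,
# assuming the timestamp is the 4th field, e.g. "[01/Jun/2012:00:00:01".
log_span() {
  local first last
  first=$(head -n 1 -- "$1" | awk '{ print substr($4, 2) }')  # drop the "["
  last=$(tail -n 1 -- "$1" | awk '{ print substr($4, 2) }')
  printf 'first: %s\nlast:  %s\n' "$first" "$last"
}
```

For example, `log_span domain-name.com-YYYY-MM-log` should show the first and last days of the month (or today, for the current month).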

Next, run the AWStats update tool with a few extra parameters that point it at the new merged log file.

perl /path/to/awstats.pl -update -configdir="/path/to/awstats/configs" -config="domain-name.com" -LogFile="/tmp/apachelogs/domain-name.com-YYYY-MM-log"

Step 4. Move back any remaining files

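If you moved any AWStats data files for later months out of the way (awstatsMMYYYY.domain-name.com.txt, like July and August in our example), now’s the time to move them back where they go. Leave the old file for the month you just rebuilt in the temporary directory, since the update has re-created it.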
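A bash sketch of this step that mirrors Step 1: it moves back only the files for months after the rebuilt one, and uses `mv -n` so nothing already in the data directory gets overwritten. `restore_data_files` is a hypothetical name, and the directories are whatever you used in Step 1.

```shell
# Move awstatsMMYYYY.<domain>.txt files for months AFTER YYYY-MM from the
# holding directory back into the AWStats data directory. The rebuilt
# month's old file stays put, and mv -n never overwrites an existing file.
# Usage: restore_data_files HOLD_DIR DATA_DIR DOMAIN YYYY-MM
restore_data_files() {
  local hold=$1 data_dir=$2 domain=$3 target=${4/-/} f base
  for f in "$hold"/awstats[0-9][0-9][0-9][0-9][0-9][0-9]."$domain".txt; do
    [ -e "$f" ] || continue
    base=${f##*/}
    # Build YYYYMM from the MMYYYY in the file name and compare numerically.
    if [ "${base:9:4}${base:7:2}" -gt "$target" ]; then
      mv -n -- "$f" "$data_dir"/
    fi
  done
}
```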

Yeah, that fixed it!